The New Year is here, which means it’s time to relax a little and venture into something interesting, if hypothetical. In this holiday-edition NeuroNugget, we will review the current state of deep learning… but from the point of view of artificial general intelligence (AGI). When are we going to achieve AGI? Is there even any hope? Let’s find out! We begin with a review of how close various fields of deep learning currently are to AGI, starting with computer vision.

Deep Learning: Will the Third Hype-Wave be the Last?

Recently, I’ve been asked to share my thoughts on whether current progress in deep learning can lead to developing artificial general intelligence (AGI), in particular, human-level intelligence, anytime soon. Come on, researchers, when will your thousands of papers finally get us to androids dreaming of electric sheep? And it so happens that also very recently a much more prominent researcher, Francois Chollet, decided to share his thoughts on measuring and possibly achieving general intelligence in a large and very interesting piece, On the Measure of Intelligence. In this new mini-blog-series, I will try to show my vision of this question, give you the gist of Chollet’s view, and in general briefly explain where we came from, what we have now, and whether we are going in the direction of AGI.

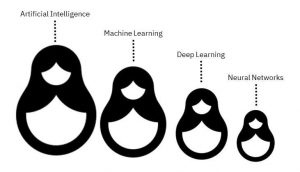

In this blog, we have talked many times about the third “hype-wave” of artificial intelligence that began in 2006–2007, and that encompasses the deep learning revolution that fuels most of the wonderful AI advances we keep hearing about. Deep learning means training AI architectures based on deep neural networks. So the industry has come full circle: artificial neural networks were actually the first AI formalism, developed back in the 1940s when nobody even thought of AI as a research field.

Actually, there have been two full circles because artificial neural networks (ANNs) have fueled both previous “hype waves” for AI. Here is a nice illustration from (Jin et al., 2018):

The 1950s and 1960s saw the first ANNs implemented in practice, including the famous Perceptron by Frank Rosenblatt, which was actually a one-neuron neural network, and it was explicitly motivated as such (Dr. Rosenblatt was a neurobiologist and a psychologist, among other things). The second wave came in the second half of the 1980s, and it was mostly caused by the popularization of backpropagation — people suddenly realized they could compute (“propagate”) the gradient of any composition of elementary functions, and it looked like the universal “master algorithm” that would unlock the door to AI. Unfortunately, neither the 1960s nor the 1980s could supply researchers with sufficient computational power and, even more importantly, sufficient data to make deep neural networks work. Here is the Perceptron by Frank Rosenblatt in its full glory; for more details see, e.g., here:

Neural networks did work in the mid-2000s, however. Results coming from the groups of Geoffrey Hinton, Yoshua Bengio, and Yann LeCun — they each had different approaches but were all instrumental for the success of deep learning — made neural networks go from being “the second best way of doing just about anything” (a famous quote from the early 1990s usually attributed to John Denker) to an approach that kept revolutionizing one field of machine learning after another. Deep learning was, in my opinion, as much a technological revolution as a mathematical one, and it was made possible by the large datasets and new computational devices (GPUs, for one) that became available in the mid-2000s. Here is my standard illustration for the deep learning revolution (we’ll talk about the guy on the right later):

It would be impossible to give a full survey of the current state of deep learning in a mere blog post; by now, that would require a rather thick book, and unfortunately this book has not yet been written. But in this first part of our AGI-related discussion, I want to give a brief overview of what neural networks have achieved in some important fields, concentrating on comparisons with human-level results. Are current results paving the road to AGI or just solving minor specific problems with no hope for advanced generalization? Are we maybe even already almost there?

Computer Vision: A Summer Project Gone Wrong

Computer vision is one of the oldest and most important fields of artificial intelligence. As usual in AI, it began with a huge understatement. In 1966, Seymour Papert presented the Summer Vision Project; the idea was to use summer interns in MIT to solve computer vision, that is, solve segmentation and object detection in one fell swoop. I’m exaggerating, but only a little; here is the abstract as shown in the memo (Papert, 1966):

This summer project proved to last for half a century and counting. Even current state of the art systems do not entirely solve such basic high-level computer vision problems as segmentation and object detection, let alone navigation in the 3D world, scene reconstruction, depth estimation and other more ambitious problems. On the other hand, we have made excellent progress, and human vision is also far from perfect, so maybe our current progress is already sufficient to achieve AGI? The answer, as always, is a resounding yes and no, so we need to dig a little deeper.

Current state of the art computer vision systems are virtually all based on convolutional neural networks (CNN). The idea of CNNs is very old: it was inspired by the studies of the visual cortex made by Hubel and Wiesel back in the 1950s and 1960s and implemented in AI by Kunihiko Fukushima in the late 1970s (Fukushima, 1980). Fukushima’s paper is extremely modern: it has a deep multilayer architecture, intended to generalize from individual pixels to complex associations, with interleaving convolutional layers and pooling layers (Fukushima had average pooling, max-pooling came a bit later). A few figures from the paper say it all:

Since then, CNNs have been a staple of computer vision; they were basically the only kind of neural networks that continued to produce state of the art results through the 1990s, importantly in LeCun’s group. Still, in the 1990s and 2000s the scale of CNNs that we could realistically train was insufficient to get real breakthroughs.

The deep learning revolution came to computer vision in 2010–2011, when researchers learned to train neural networks on GPUs. This significantly expanded the possibilities of deep CNNs, allowing for much deeper networks with much more units. The first problem successfully solved by neural networks was image classification. A deep neural network from Jurgen Schmidhuber’s group (Ciresan et al., 2011) won a number of computer vision competitions in 2011–2012, beating state of the art classical solutions. But the first critically acclaimed network that marked the advent of deep learning in computer vision was AlexNet, coming from Geoffrey Hinton’s group (Krizhevsky et al., 2017), which won the ILSVRC 2012 competition on large-scale image classification based on the ImageNet dataset. It was a large network that fit on two GPUs and took a week to train.

But the results were astonishing: in ILSVRC 2012, the second place was taken by the ISI team from Japan with a classical approach, achieving 26.2% classification error (out of five attempts); AlexNet’s error was 15.3%…

Since then, deep convolutional networks became the main tool of computer vision for practically all tasks: image classification, object detection, segmentation, pose detection, depth estimation, video processing, and so on and so forth. Let us briefly review the main ideas that still contribute to state of the art architectures.

Architectural Ideas of Convolutional Networks

AlexNet (Krizhevsky et al., 2017) was one of the first successful deep CNNs, with more than 20 layers; moreover, AlexNet features model parallelization, working on two GPUs at once:

At the time, it was a striking engineering feat but by now it has become common practice and you can get a distributed model with just a few lines of code in most modern frameworks.

New models appearing in 2013–2015 supplemented the main idea of a deep CNN with new architectural features. The VGG series of networks (Simonyan, Zisserman, 2014) represented convolutions with large receptive fields as compositions of 3х3 convolutions (and later 1xn and nx1 convolutions); this allows us to achieve the same receptive field size with fewer weights, while improving robustness and generalization. Networks from the Inception family (Szegedy et al., 2014) replaced basic convolutions with more complex units; this “network in network” idea is also widely used in modern state of the art architectures.

One of the most influential ideas came with the introduction of residual connections in the ResNet family of networks (He et al., 2015). These “go around” a convolutional layer, replacing a layer that computes some function F(x) with the function F(x)+x:

This was not a new idea; it had been known since the mid-1990s in recurrent neural networks (RNN) under the name of the “constant error carousel”. In RNNs, it is a requirement since RNNs are very deep by definition, with long computational paths that lead to the vanishing gradients problem. In CNNs, however, residual connections led to a “revolution of depth”, allowing training of very deep networks with hundreds of layers. Current architectures, however, rarely have more than about 200 layers. We simply don’t know how to profit from more (and neither does our brain: it is organized in a very complex way but common tasks such as object detection certainly cannot go through more than a dozen layers).

All of these ideas are still used and combined in modern convolutional architectures. Usually, a network for some specific computer vision problem contains some backbone network that uses some or all of the ideas above, such as the Inception Resnet family, and perhaps introduces something new, such as the dense inter-layer connections in SqueezeNet or depthwise separable convolutions in the MobileNet family designed to reduce the memory footprint.

This post does not allow enough space for an in-depth survey of computer vision, so let me just make an example of one specific problem: object detection, i.e., the problem of localizing objects in a picture and then classifying them. There are two main directions here (see also a previous NeuroNugget). In two-stage object detection, first one part of the network generates a proposal (localization) and then another part classifies objects in the proposals (recognition). Here the primary example is the R-CNN family, in particular Faster R-CNN (Ren et al., 2015), Mask R-CNN (He et al., 2018), and current state of the art CBNet (Liu et al., 2019). In one-stage object detection, localization and recognition are done simultaneously; here the most important architectures are the YOLO (Redmon et al., 2015; 2016; 2018) and SSD (Liu et al., 2015) families; again, most of these architectures use some backbone (i.e., VGG or ResNet) for feature extraction and augment it with new components that are responsible for localization. And, of course, there are plenty of interesting ideas, with more coming every week: a recent comprehensive survey of object detection has more than 300 references (Jiao et al., 2019).

No doubt, modern CNNs have made a breakthrough in computer vision. But what about AGI? Are we done with the vision component? Let’s begin with the positive side of the issue.

Superhuman Results in Computer Vision

From the very beginning of the deep learning revolution in computer vision, results have appeared that show deep neural networks outperform people in basic computer vision tasks. Let us briefly review the most widely publicized results, some of which we have actually already discussed.

The very first deep neural net that overtook its classical computer vision predecessors was developed by Dan Ciresan under the supervision of Jurgen Schmidhuber (Ciresan et al., 2011) and was already better than humans! At least it was in some specific, but quite important tasks: in particular, it achieved superhuman performance in the IJCNN Traffic Sign Recognition Competition. The average recognition error of human subjects on this dataset was 1.16%, while Ciresan’s net achieved 0.56% error on the test set.

The main image classification dataset is ImageNet, featuring about 15 million images that come from 22,000 classes. If you think that humans should have 100% success rate on a dataset that was, after all, collected and labeled by humans, think again. During ImageNet collection, the images were validated with a binary question “is this class X?”, quite different from the task “choose X with 5 tries out of 22000 possibilities”. For example, in 2014 a famous deep learning researcher Andrej Karpathy wrote a blog post called “What I learned from competing against a ConvNet on ImageNet”; he spent quite some time and effort and finally evaluated his Top-5 error rate to be about 5% (for a common ImageNet subset with 1,000 classes).

You can try it yourself, classifying the images below (source); and before you confidently label all of them “doggie”, let me share that ImageNet has 120 different dog breeds:

The first network that claimed to achieve superhuman accuracy on this ImageNet-1000 dataset was the above-mentioned ResNet (He et al., 2015). This claim could be challenged, but by now the record Top-5 accuracy for CNN architectures has dropped below 2% (Xie et al., 2019), which is definitely superhuman, so this is another field where we’ve lost to computers.

But maybe classifying dog breeds is not quite what God and Nature had in mind for the human race? There is one class of images that humans, the ultimate social animals, are especially good at recognizing: human faces. Neurobiologists argue that we have special brain structures in place for face recognition; this is evidenced, in particular, by the existence of prosopagnosia, a cognitive disorder in which all visual processing tasks are still solved perfectly well, and the only thing a patient cannot do is recognize faces (even their own!).

So can computers beat us at face recognition? Sure! The DeepFace model from Facebook claimed superhuman face recognition as early as 2014 (Taigman et al., 2014), FaceNet (Schroff et al., 2015) came next with further improvements, and the current record on the standard Labeled Faces in the Wild (LFW) dataset us 99.85% (Yan et al., 2019), while human performance on the same problem is only 97.53%.

Naturally, for more specialized tasks, where humans need special training to perform well at all, the situation is even better for the CNNs. Plenty of papers show superhuman performance on various medical imaging problems; for example, the network from (Christiansen et al., 2018) recognizes cells on microscopic images, estimates their size and status (whether a cell is dead or alive) much better than biologists, achieving, for instance, 98% accuracy on dead/alive classification as compared to 80% achieved by human professionals.

As a completely different sample image-related problem, consider geolocation, i.e., recognizing where a photo was taken based only on the pixels in the photo (no metadata). This requires knowledge of common landmarks, landscapes, flora, and fauna characteristic for various places on Earth. The PlaNet model, developed in Google, already did it better than humans back in 2016 (Weyand et al., 2016); here are some sample photos that the model localizes correctly:

With so many results exceeding human performance, can we say that computer vision is solved by now? Is it a component ready to be plugged into a potential AGI system?

Why Computer Vision Is Not There Yet

Unfortunately, no. One sample problem that to a large extent belongs to the realm of computer vision and has obvious huge economical motivation is autonomous driving. There are plenty of works devoted to this problem (Grigorescu et al., 2019), and there is no shortage of top researchers working on it. But, although, say, semantic segmentation is a classical problem solved quite readily by existing models, there are still no “level 5” autonomous driving models (this is the “fully autonomous driving” level from the Society of Automotive Engineers standard).

What is the problem? One serious obstacle is that the models that successfully recognize two-dimensional images “do not understand” that these images are actually projections of a three-dimensional world. Therefore, the models often learn to actively use local features (roughly speaking, textures of objects), which then hinders generalization. A very difficult open problem, well-known to researchers, is how to “tell” the model about the 3D world around us; this probably should be formalized as a prior distribution, but it is so complex that nobody yet has good ideas on what to do about it.

Naturally, researchers have also developed systems that work with 3D data directly; in particular, many prototypes of self-driving cars have LiDAR components. But here another problem rears its ugly head: lack of data. It is much more difficult and expensive to collect three-dimensional datasets, and there is no 3D ImageNet at present. One promising way to solve this problem is to use synthetic data (which is naturally three-dimensional), but this leads to the synthetic-to-real domain transfer problem, which is also difficult and also yet unsolved (Nikolenko, 2019).

The second problem, probably even more difficult conceptually, is the problem-setting itself. Computer vision problems where CNNs can reach superhuman performance are formulated as problems where models are asked to generalize the training set data to a test set. Usually the data in the test set are of the same nature as the training set, and even models that make sense in the few-shot or one-shot learning setting usually cannot generalize freely to new classes of objects. For example, if a segmentation model for a self-driving car has never seen a human on a Segway in its training set, it will probably recognize the guy as a human but will not be able to create a new class for this case and learn that humans on Segways move much faster than humans on foot, which may prove crucial for the driving agent. It is quite possible (and Chollet argues the same) that this setting represents a conceptually different level of generalization which requires completely novel ideas.

Today, we have considered how close computer vision is to a full-fledged AGI component. Next time, we will continue this discussion and talk about natural language processing: how far are we from passing the Turing Test? There will be exciting computer-generated texts but, alas, much fewer superhuman results there…

Sergey Nikolenko

Chief Research Officer, Neuromation