Processes are an essential part of any project, whether you are planning to renovate your shed or bring a new credit scoring algorithm to production.

Yet when it comes to machine learning, processes are often the weakest link, and weak processes cause many projects to fail. Per Gartner, over 85% of AI projects launched between today and 2022 will deliver subpar results due to issues with data, models, or the people in charge of managing them.

Given such a bleak prognosis, it is no surprise that many leaders today are looking into MLOps platforms.

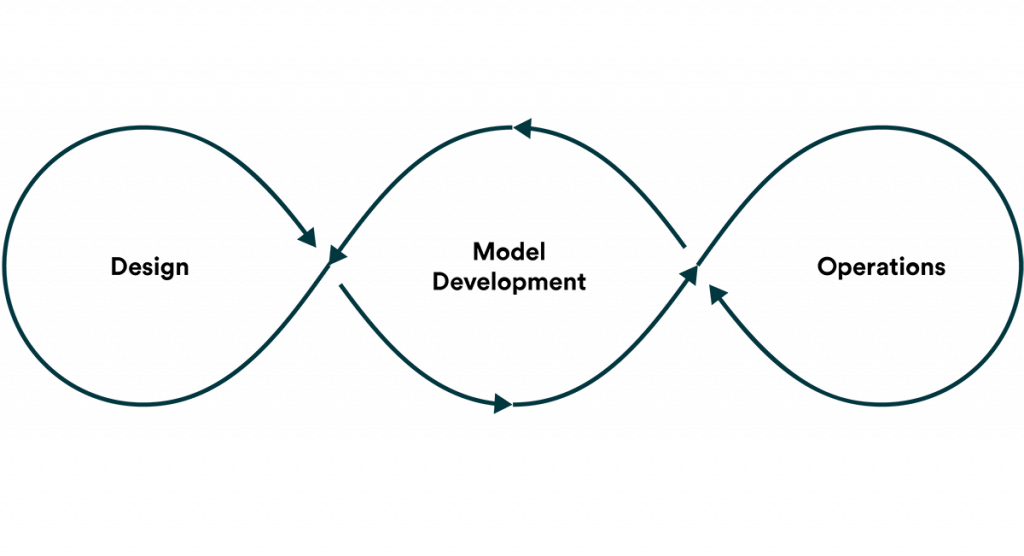

An MLOps platform is a consolidated infrastructure hub of resources, tools, frameworks, and process automation for managing all steps of the machine learning life cycle.

To support scalable machine learning use cases, your infrastructure must include:

- Data connectors and ingestion pipelines

- Model catalog, versioning, and validation environment

- Model testing and deployment pipelines

- Operational toolkit for managing cloud costs and CI/CD

- Model management and governance tools

- Overarching security facets to protect your assets

These characteristics translate into the following set of must-have features for an MLOps platform.

1. Data Management and Labelling

Good data is key to successful machine learning projects. But as we all know, getting ahold of it is often an exercise in patience.

While an MLOps platform cannot miraculously organize your business data in all the right places, it can help you set baseline standards for operationalizing the trapped insights. Some vendors such as Google Cloud provide blueprints for building data management pipelines in conjunction with other tools. Others, such as Neu.ro MLOps Platform, can set up a proper data management infrastructure for you, plus provide data augmentation and data labeling services for an extra cost.

2. Experiment Tracking

The beauty (and the challenge) of machine learning is that you have a multitude of methods and approaches to experiment with.

However, you also need to keep close tabs on how your model performance changes under different conditions pertaining to training methods, datasets, validation methods, and more.

An MLOps platform, in this case, acts as a single source of truth for managing and monitoring all your ML work.

Some of the handy features include:

- Collaborative environment for data preparation, experiment tracking, model validation, and re-training.

- Quick overview of the key metrics such as accuracy, loss, and drift among others.

- Secure model and data storage.

- Model performance analytics to provide quality insights for debugging.

- Model versioning and replication functionality.

- Ability to invite external collaborators and easily share your models.
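To make the "single source of truth" idea concrete, here is a minimal sketch of what experiment tracking boils down to: every run records its parameters and metrics in one place, so runs can be compared later. The `Run` and `ExperimentTracker` names, the run names, and the metric values are all hypothetical and exist only for illustration. A real platform would persist runs and add access control, storage, and dashboards.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Run:
    """One training experiment: its settings and the metrics it produced."""
    name: str
    params: Dict[str, object]
    metrics: Dict[str, float] = field(default_factory=dict)


class ExperimentTracker:
    """Hypothetical in-memory tracker (illustration only, nothing is persisted)."""

    def __init__(self) -> None:
        self.runs: List[Run] = []

    def log_run(self, name: str, params: Dict[str, object],
                metrics: Dict[str, float]) -> Run:
        # Copy the dicts so later changes by the caller do not alter the record.
        run = Run(name=name, params=dict(params), metrics=dict(metrics))
        self.runs.append(run)
        return run

    def best_run(self, metric: str, higher_is_better: bool = True) -> Run:
        # Only compare runs that actually reported the metric.
        candidates = [r for r in self.runs if metric in r.metrics]
        if not candidates:
            raise ValueError(f"no run reported metric {metric!r}")
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r.metrics[metric])


# Made-up runs that differ in learning rate and dataset version.
tracker = ExperimentTracker()
tracker.log_run("baseline", {"lr": 0.1, "dataset": "v1"}, {"accuracy": 0.81, "loss": 0.52})
tracker.log_run("lower-lr", {"lr": 0.01, "dataset": "v1"}, {"accuracy": 0.86, "loss": 0.41})
tracker.log_run("new-data", {"lr": 0.01, "dataset": "v2"}, {"accuracy": 0.84, "loss": 0.39})

print(tracker.best_run("accuracy").name)                      # lower-lr
print(tracker.best_run("loss", higher_is_better=False).name)  # new-data
```

Keeping parameters next to metrics is the important design choice here: it lets you answer "what changed between these two runs?" without digging through notebooks.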

3. Pre-installed Frameworks and Libraries

Demand breeds supply. With the rising interest in ML/AI, data scientists can now choose between an array of open-source and proprietary frameworks and libraries for their projects. On the other hand, the use of different tools among your team members can lead to tech fragmentation, which makes model replication challenging.

An MLOps platform helps ensure that everyone has access to the tools they need and favor, plus offers an ability to configure custom toolkits.

At the very least, you should consider a platform that supports:

- TensorFlow/TensorRT

- Keras

- PyTorch

- Catalyst

- APEX

- Caffe2

- H2O

- NumPy

- Spark

- scikit-learn

4. Training Pipelines

Model training (and re-training) can eat up a good chunk of productive time if you need to constantly schedule it manually, plus provision the needed data and infrastructure for those jobs. Manual errors will inevitably creep in.

While not every MLOps platform comes with pre-made training pipelines, a growing cohort of vendors provide the following features:

- A selection of reusable components and automation triggers for assembling custom training pipelines.

- CI/CD tools for scheduling, task queuing, and smart alerts.

- Integrations with popular container services and orchestration of containerized batch jobs.

- Functionality for composing code, runtimes, artifacts, triggers, and data streams, among other things.

All of the above makes your model training more deterministic and allows you to run continuous training.
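As a rough illustration of reusable components plus an automation trigger, the sketch below chains three small steps into a pipeline and runs it only when a drift score crosses a threshold. Everything in it is an assumption made for the example: the step functions, the `should_retrain` trigger, the 0.2 threshold, and the stand-in "model" (a simple mean). Real platforms express the same idea with containerized jobs and schedulers.

```python
from typing import Callable, Dict, List

# A step takes the shared context and returns an updated copy of it.
Step = Callable[[Dict[str, object]], Dict[str, object]]


def run_pipeline(steps: List[Step], context: Dict[str, object]) -> Dict[str, object]:
    """Run each step in order, passing the context along."""
    for step in steps:
        context = step(context)
    return context


def load_data(ctx: Dict[str, object]) -> Dict[str, object]:
    # Placeholder data; a real step would read from a data connector.
    return {**ctx, "data": [1.0, 2.0, 3.0, 4.0]}


def train(ctx: Dict[str, object]) -> Dict[str, object]:
    # Stand-in "model": predict the mean of the training data.
    data = ctx["data"]
    return {**ctx, "model": sum(data) / len(data)}


def evaluate(ctx: Dict[str, object]) -> Dict[str, object]:
    # Mean absolute error of the constant prediction on the same data.
    data, model = ctx["data"], ctx["model"]
    return {**ctx, "mae": sum(abs(x - model) for x in data) / len(data)}


def should_retrain(drift_score: float, threshold: float = 0.2) -> bool:
    """Hypothetical trigger: retrain once monitored drift exceeds the threshold."""
    return drift_score > threshold


TRAINING_PIPELINE: List[Step] = [load_data, train, evaluate]

if should_retrain(drift_score=0.35):
    result = run_pipeline(TRAINING_PIPELINE, {"run_id": "retrain-001"})
    print(result["model"], result["mae"])  # 2.5 1.0
```

Because every step has the same signature, steps can be reordered, swapped, or reused across pipelines, which is what makes scheduled and continuous training practical.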

5. Model Registry

A model registry is a centralized repo for hosting all the important artifacts for production-ready models. Some MLOps platforms feature ready-to-use model registries. Others provide you with a toolkit for assembling one.

At the very least, a solid model registry needs to include:

- Model metadata, including model type, key artifacts, features, and creation time.

- Training parameters and hyperparameters associated with the current version.

- Key performance metrics (e.g. accuracy, precision, recall).

In addition, your registry should store any extra data you need to effectively deploy the model at runtime.
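The fields listed above map naturally onto a small record type. The following is a minimal sketch, assuming an in-memory store. The `ModelVersion` and `ModelRegistry` names, the `credit-scoring` model, the example bucket URIs, and all metric values are hypothetical. A production registry would sit on top of a database and an artifact store.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass(frozen=True)
class ModelVersion:
    """One registered version of a model, with the minimum useful metadata."""
    name: str
    version: int
    model_type: str
    artifact_uri: str
    features: List[str]
    hyperparameters: Dict[str, object]
    metrics: Dict[str, float]
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ModelRegistry:
    """Hypothetical in-memory registry that keeps every version of every model."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, model_type: str, artifact_uri: str,
                 features: List[str], hyperparameters: Dict[str, object],
                 metrics: Dict[str, float]) -> ModelVersion:
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(
            name=name,
            version=len(history) + 1,  # versions are numbered 1, 2, 3, ...
            model_type=model_type,
            artifact_uri=artifact_uri,
            features=list(features),
            hyperparameters=dict(hyperparameters),
            metrics=dict(metrics),
        )
        history.append(entry)
        return entry

    def latest(self, name: str) -> ModelVersion:
        return self._versions[name][-1]


registry = ModelRegistry()
v1 = registry.register(
    "credit-scoring", "gradient_boosting",
    "s3://example-bucket/credit-scoring/1/model.pkl",  # made-up location
    ["income", "age", "open_accounts"],
    {"n_estimators": 200, "max_depth": 4},
    {"accuracy": 0.88, "precision": 0.85, "recall": 0.79},
)
v2 = registry.register(
    "credit-scoring", "gradient_boosting",
    "s3://example-bucket/credit-scoring/2/model.pkl",  # made-up location
    ["income", "age", "open_accounts"],
    {"n_estimators": 400, "max_depth": 4},
    {"accuracy": 0.90, "precision": 0.86, "recall": 0.83},
)

print(registry.latest("credit-scoring").version)  # 2
```

Older versions are never overwritten, so you can always trace which parameters and metrics belonged to the model that was in production at a given time.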

6. Model Deployment

Machine learning models fail in production for an array of reasons: lack of portability, scalability issues, conflicts with the target environment, and so on.

MLOps platforms promise to add more predictability into deployments by:

- Stitching together model development and deployment pipelines to mitigate inconsistency issues.

- Leveraging container clusters to organize different production-ready models.

- Creating custom rules for auto-deployment schemes.

In short, an MLOps platform helps adapt the core principles of CI/CD to machine learning projects.
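A custom auto-deployment rule can be as simple as a function that compares a candidate model against the one currently in production. The sketch below is one possible rule, not a standard: the `should_promote` name, the `latency_ms` field, and the thresholds (a 0.01 accuracy gain and a 100 ms latency ceiling) are assumptions chosen for the example.

```python
from typing import Dict


def should_promote(candidate: Dict[str, float], production: Dict[str, float],
                   metric: str = "accuracy", min_gain: float = 0.01,
                   max_latency_ms: float = 100.0) -> bool:
    """Promote only if the candidate is clearly better and still fast enough."""
    gain = candidate[metric] - production[metric]
    return gain >= min_gain and candidate["latency_ms"] <= max_latency_ms


# Made-up evaluation results for the live model and three candidates.
production = {"accuracy": 0.84, "latency_ms": 40.0}
better = {"accuracy": 0.87, "latency_ms": 55.0}
too_slow = {"accuracy": 0.90, "latency_ms": 250.0}
marginal = {"accuracy": 0.842, "latency_ms": 35.0}

print(should_promote(better, production))    # True
print(should_promote(too_slow, production))  # False: breaks the latency ceiling
print(should_promote(marginal, production))  # False: gain is below min_gain
```

Writing the rule down as code means it can run inside the deployment pipeline and be reviewed and versioned like any other change.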

To Conclude

As with other tech investments, the key to selecting the optimal MLOps platform is a formalized list of requirements.

As you shop around for vendors, ask yourself these questions:

- Will this ML solution reduce development risks?

- Does our choice integrate with our current workflows, or can it be adapted to them?

- Will it help reduce the time spent on infrastructure setup and management?

- Can this MLOps platform improve collaboration among team members?

And lastly — will our prime choice accelerate the delivery of ML value?