Data science as a job has been glamorized a lot in pop culture. But in reality, we know that a lot of our time goes towards mundane tasks: data cleansing, requesting GPU/CPU capacity, fiddling with target environments, and so forth. Just a few precious hours per day actually go to the “cool beans”: model development and performance testing.

That’s pretty unfair, right? Luckily, we are not the only ones to think so. A lot of people recognize the need for a more “industrialized” approach to ML/DL. And that’s what MLOps platforms promise to deliver.

What is MLOps and Why Do We Need It?

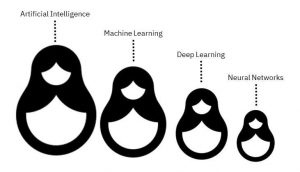

MLOps is a new movement to productize the development and deployment of machine learning models, much as DevOps did for software. Its core practices aim to improve alignment and collaboration between data scientists and the Ops professionals responsible for model deployment and management, at every stage of the machine learning lifecycle.

A better approach to managing ML and DL projects is long overdue. Per Algorithmia, 22% of teams need 1-3 months to move a new ML model to production, and another 18% require 3+ months. Time-to-market is just one factor, though. Model failure rates at the deployment stage are a major concern too (as you likely know firsthand). In most cases, models fail because of poorly configured target environments, missing model documentation, and insufficient training, among other factors. MLOps best practices are aimed at preventing such issues by introducing a greater degree of automation, continuity, and predictability.

What is Continuous Machine Learning?

Continuous Machine Learning (CML) is aimed at introducing CI/CD best practices into the machine learning model lifecycle. Some of the best MLOps platforms provide the tools for:

- Facilitating data management, governance, and storage.

- Automating cloud infrastructure provisioning and environment setup.

- Creating (semi-) automated training and deployment pipelines.

- Introducing metrics for measuring model training, performance, and drift over time.
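To make the last point more concrete, here is a minimal sketch of one drift metric you could track: the Population Stability Index (PSI), which compares how a feature (or model score) is distributed at training time versus in production. The function name, the bin count, and the sample data are all illustrative assumptions, not something a particular MLOps platform ships. A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but treat that threshold as a convention rather than a law.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of the same feature with equal-width bins."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp so values outside the training range land in the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical data: scores seen during training vs. scores seen in production.
training_scores = [i / 100 for i in range(100)]
live_scores = [min(s + 0.3, 1.0) for s in training_scores]

psi_same = population_stability_index(training_scores, training_scores)
psi_shifted = population_stability_index(training_scores, live_scores)
print(f"no drift: {psi_same:.3f}, shifted: {psi_shifted:.3f}")
```

In a real setup, a scheduled job would compute a metric like this per feature and raise an alert when it crosses whatever threshold your team agrees on.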

How Can an MLOps Platform Help Me With My Work?

An MLOps platform streamlines the training, deployment, management, and maintenance of ML/DL models, so that you can spend less time on “prep” legwork (data management, infrastructure, toolkit, and environment configuration) and focus on model development.

MLOps platforms enable data scientists to:

- Rely on automated pipelines and processes

- Access a pre-configured set of tools, libraries, and frameworks

- Connect new data streams rapidly

- Store, document, and manage different model versions

- Unify data and model governance

- Collaborate with others in shared workspaces
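If “store, document, and manage different model versions” sounds abstract, the sketch below shows the basic idea with a tiny in-memory registry. Everything in it is hypothetical: the class names, the `s3://example-bucket/...` paths, and the metric values are made up for illustration, and a real platform would persist this metadata and the model artifacts for you.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_uri: str  # where the serialized model lives (hypothetical path)
    metrics: Dict[str, float]
    notes: str = ""


@dataclass
class ModelRegistry:
    _versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(
        self, name: str, artifact_uri: str, metrics: Dict[str, float], notes: str = ""
    ) -> ModelVersion:
        """Store a new version; numbers start at 1 and increase per model name."""
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(history) + 1, artifact_uri, dict(metrics), notes)
        history.append(entry)
        return entry

    def best(self, name: str, metric: str) -> ModelVersion:
        """Return the version with the highest value for the given metric."""
        return max(self._versions[name], key=lambda v: v.metrics[metric])


registry = ModelRegistry()
registry.register("churn", "s3://example-bucket/churn/v1.pkl", {"auc": 0.81}, "baseline")
registry.register("churn", "s3://example-bucket/churn/v2.pkl", {"auc": 0.86}, "added tenure features")

best = registry.best("churn", "auc")
print(best.version, best.notes)
```

The point is less the code than the habit: every model that leaves your notebook gets a version number, a location, its metrics, and a note on what changed.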

Thanks to this greater degree of standardization, you can run more experiments and deliver production-ready models faster.

What Features Should I Look for in an MLOps Platform?

In 2019, the market for MLOps solutions was a “modest” $350 million. By 2025, it’s projected to reach $4 billion. In other words, a lot of new MLOps platforms will be “up for grabs” soon!

However, the market for MLOps solutions today is rather fragmented, with a number of open-source, managed, and SaaS/PaaS options available. So it follows that the capabilities vary a lot too.

While your choice of an MLOps platform should align with the ML use cases you plan to pursue, at the very least look for the following features:

- Data management: prioritize platforms that provide a reference data management architecture and supporting tools for building it. Some vendors, such as the Neu.ro MLOps Platform, also provide data augmentation and data labeling services on top of data management capabilities.

- Collaboration workspaces: MLOps attempts to break down silos between the different teams (and people) working on AI projects. Accordingly, you should look into platforms that have an attractive GUI for organizing, tracking, and sharing your experiments. Model version control is another “must-have” feature that will save you heaps of time (and prevent miscommunication and model misuse).

- Pre-built pipelines: not every MLOps platform provides ready-to-use training pipelines. But a good number of them offer “toolkits” for creating custom model training pipelines from reusable code components. Also, consider vendors providing native integration with popular container tools (Kubernetes, Docker), plus native (or easy-to-integrate) CI/CD tools for job scheduling, queuing, and smart alerts setup.

- Extensions: your MLOps platform shouldn’t stand in the way of your ability to scale. Thus, consider platforms that can be extended with custom libraries, frameworks, and other third-party tools that you may require for new types of projects.
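To illustrate what “creating custom training pipelines from reusable code components” can look like, here is a deliberately tiny sketch in plain Python. The step names, the shared context dictionary, and the toy linear model are assumptions made for the example. Actual platforms wrap the same idea in their own SDKs, containers, and schedulers.

```python
from typing import Any, Callable, Dict

Context = Dict[str, Any]
Step = Callable[[Context], Context]


def make_pipeline(*steps: Step) -> Step:
    """Chain reusable steps; each one receives and returns a shared context."""
    def run(context: Context) -> Context:
        for step in steps:
            context = step(dict(context))  # copy so steps don't mutate earlier state
        return context
    return run


def load_data(ctx: Context) -> Context:
    # Toy dataset standing in for a real data source: y = 2x + 1.
    ctx["rows"] = [(float(x), 2.0 * x + 1.0) for x in range(10)]
    return ctx


def train(ctx: Context) -> Context:
    # Ordinary least squares for a single feature.
    xs, ys = zip(*ctx["rows"])
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in ctx["rows"]) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    ctx["slope"], ctx["intercept"] = slope, mean_y - slope * mean_x
    return ctx


def evaluate(ctx: Context) -> Context:
    # Mean absolute error on the same rows (a real pipeline would use held-out data).
    errors = [abs(ctx["slope"] * x + ctx["intercept"] - y) for x, y in ctx["rows"]]
    ctx["mae"] = sum(errors) / len(errors)
    return ctx


training_pipeline = make_pipeline(load_data, train, evaluate)
result = training_pipeline({})
print(result["slope"], result["intercept"], result["mae"])
```

Because each step is just a function with the same signature, you can swap `load_data` for another source or add a deployment step without touching the rest.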

This post provides a deeper dive into the best-rated AI tools and platforms in 2021.

I’m Sold! What’s Next?

As stated above, MLOps platforms come in different shapes. So you’ll need to make your pick first:

- Open-source solutions: highly customizable and extensible, but may require some “hack-togethers” to match your project needs.

- Off-the-shelf proprietary platforms: end-to-end platforms that offer a range of native capabilities for data management, experiment tracking, model training (and re-training), and deployment.

- Managed MLOps platforms: a “middle-ground” option that lets you select your MLOps stack and have the vendor configure and maintain it for you. Neu.ro is among the few service providers offering this option.

In essence, your choice of an MLOps platform will depend on how much customization you’d like to have versus how much time (and resources) you can afford to spend on fiddling with the tech.